The Manhattan Project involved one of the largest scientific collaborations ever undertaken. Out of it emerged countless new technologies, going far beyond the harnessing of nuclear fission. The development of early computing benefited enormously from the Manhattan Project’s innovation, especially with the Los Alamos laboratory’s developments in the field both during and after the war.

Analog computing

Prior to the advent of modern, digital computers, complex analog computers were used to perform calculations. Although the word “computer” has come to mean a number of things, analog and digital computers share the same basic task: calculating and manipulating numbers using logical rules. Analog computers have existed for hundreds of years, and include such simple devices as the slide rule.

Analog computers were vital to work at Los Alamos. Enrico Fermi was renowned for his exceptional skills on his German Brunsviga calculator. When physicist Herbert Anderson bought a faster Marchant calculator, Fermi upgraded too, always wanting to be on the cutting edge.

Analog computers were so integral to the Manhattan Project, and so often used, that they frequently broke down. Physicists Nicholas Metropolis and Richard Feynman set up a kind of computer repair shop, taking apart the machines and working on how to fix jams and breakages. When MED officials discovered Metropolis and Feynman’s outfit, they initially shut it down. They soon realized, however, that their services were vital, and the MED allowed Metropolis and Feynman’s hobby to continue unimpeded.

The Project at Los Alamos also used old punch-card computers produced by IBM. When the machines were first delivered to the lab, the scientists were skeptical. A race was organized between the IBM machines and the hand-operated computers. Although the two initially kept pace, after about a day of work the hand operators began to tire, while the punch-card machines kept working. Fermi eventually became so enamored with the machines that they inspired him to explore the world of digital computing.

The Dawn of Digital: ENIAC

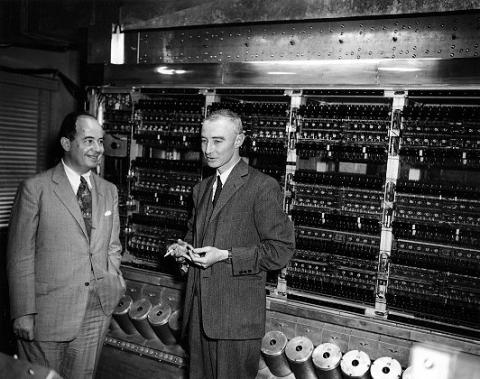

One of the earliest digital computers was brought online on February 14, 1946, when the University of Pennsylvania announced the “Electronic Numerical Integrator and Computer”: ENIAC. Constructed at the Moore School of Electrical Engineering, ENIAC was built to calculate artillery firing tables, which provided information to help artillerymen aim their weapons. ENIAC weighed more than 60,000 pounds, covered 1,800 square feet, consumed 150 kilowatts of power, and cost $500,000 to build (about $6,000,000 in today’s dollars).

For their money, the U.S. Army Ordnance Corps received a processor that could handle 50,000 instructions per second; an iPhone processor, by contrast, can handle about five billion. Still, ENIAC significantly sped up calculation times: artillery calculations that had previously taken twelve hours on a hand calculator could be done in just thirty seconds. ENIAC was intertwined with nuclear science from the beginning. One of its first real uses was by Edward Teller, who used the machine in his early work on nuclear fusion reactions.

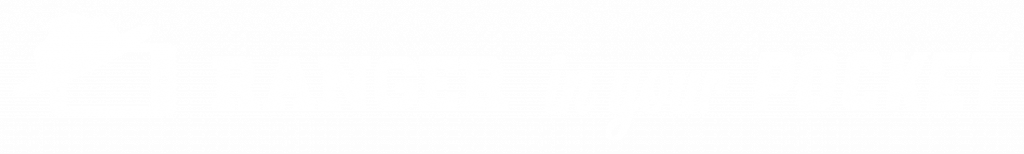

John von Neumann

One of the most important names in the history of computing is John von Neumann, a Hungarian-American polymath and Manhattan Project veteran. Von Neumann joined Princeton’s Institute for Advanced Study (IAS) in 1933, the same year as his mentor, Albert Einstein. Like many of those initially hired by IAS, von Neumann was a mathematician by training.

During the war, he worked at Los Alamos on the mathematics of explosive shockwaves for the implosion-type Fat Man weapon. He worked with IBM mechanical tabulating machines tailored for this specific purpose. As he grew familiar with the tabulators, he began to imagine a more general machine, one that could handle a far wider range of mathematical challenges: a computer.

Near the end of the war, von Neumann put together a report on the architecture of such a machine; today, that design is still called the von Neumann architecture. His work relied on the ideas of Alan Turing, a young mathematician at Princeton whose work had defined the limits of computability. Von Neumann’s dream of a general machine was already being implemented in the form of ENIAC.

When von Neumann returned to Princeton after the war, he built the IAS computer, which implemented the von Neumann architecture. Starting in 1945, the IAS computer took six years to build. Meanwhile, the British “Manchester Baby” computer, the first stored-program computer, successfully ran its first program in 1948; the Electronic Delay Storage Automatic Calculator (EDSAC) at Cambridge University followed suit in 1949. Once the IAS computer was complete, its basic design was re-implemented in more than twenty other computers all over the world.

It’s a MANIAC

Von Neumann’s project at Princeton reflected a recent surge of interest in computing and its applications in science, technology, mathematics, and weapons manufacturing. The scientists working at Los Alamos, then in pursuit of nuclear fusion weapons, had every reason to join in. In 1951, a team of scientists led by Nicholas Metropolis constructed a computer called the Mathematical and Numerical Integrator and Calculator: MANIAC.

MANIAC was substantially smaller than ENIAC: only six feet high, eight feet wide, and weighing in at half a ton. MANIAC was able to store programs, while ENIAC could not. MANIAC’s design was based on the IAS computer, which had originally also been called MANIAC. It therefore used von Neumann’s architecture, making it one of the ancestors of many modern computers.

The “Super”

Although it was eventually used for a variety of purposes, MANIAC’s first job was to perform the calculations for the hydrogen bomb. When Enrico Fermi casually suggested the idea of building a fusion device in the early ’40s, Edward Teller became fascinated by the idea of designing and constructing a hydrogen bomb. Polish-American mathematician Stanislaw Ulam soon joined Teller to help build the so-called “Super”. Using a design that Oppenheimer had called “technically sweet,” the two created the Teller-Ulam device, which used a fission reaction to ignite a fusion reaction.

MANIAC, along with the IAS computer and ENIAC, was used to perform the engineering calculations required for building the bomb. It took sixty straight days of processing, all through the summer of 1951. On November 1, 1952, the first full-scale thermonuclear device was tested at Elugelab Island. The “Ivy Mike” test vaporized the entire island, as well as eighty million tons of coral. MANIAC’s calculations had been successful.

Even More Innovation

The advent of computing allowed for major innovation in the realm of simulation. Metropolis led a group that developed the Monte Carlo method, which simulates the results of an experiment by using a broad set of random numbers. It was named for the Monte Carlo casino, where Stanislaw Ulam’s uncle often gambled. First invented during the Manhattan Project, the Monte Carlo method had been used on old analog computers, but that work was slow and time-consuming. By using MANIAC, physicists like Fermi and Teller could perform simulations much faster, which allowed for a better understanding of the behavior of particles and atoms.
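To give a feel for how random numbers can stand in for an experiment, here is a minimal modern sketch in Python. It is not a reconstruction of any Los Alamos calculation: the problem (estimating pi by scattering random points over a square) is a standard textbook illustration, and the function name `estimate_pi` and its parameters are hypothetical, chosen only for this example.

```python
import math
import random


def estimate_pi(num_samples, seed=0):
    """Estimate pi by random sampling (illustrative only).

    Points are drawn uniformly from the unit square. The fraction that
    lands inside the quarter circle of radius 1 approximates pi / 4.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same result
    inside = 0
    for _ in range(num_samples):
        x = rng.random()
        y = rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    # More samples generally give a closer estimate, at the cost of more computation.
    for n in (100, 10_000, 1_000_000):
        estimate = estimate_pi(n)
        print(n, estimate, abs(estimate - math.pi))
```

The error shrinks only slowly as the number of samples grows, which helps explain why the method was so laborious by hand and why a fast machine like MANIAC made it practical.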

MANIAC was used for innumerable other experiments and discoveries. In 1953 and 1954, it performed analysis that helped discover the Delta particle, a new subatomic particle. George Gamow used it for early research into genetics. MANIAC also contributed to advancements in two-dimensional hydrodynamics, iterative functions, and nuclear cascades. And, in 1956, Mark Wells wrote a program to simulate “Los Alamos Chess,” a bishop-less, six-by-six version of the classic board game. In so doing, MANIAC became the first computer to play, and then beat, a human at a chess-like game.